Inside an AI's Brain: The Hidden Beauty of Neural Networks with DeepSeek R1 and Llama models

When we interact with AI language models like ChatGPT, Llama, or DeepSeek, we're engaging with systems that contain billions of parameters - but what does that actually mean? Today, we'll dive deep into how these massive neural networks are structured and explore fascinating ways to visualize their inner workings.

At their core, language models are intricate networks of interconnected artificial neurons. Each connection between these neurons has a weight, which we call a parameter. These weights determine how information flows through the network and ultimately influences the model's outputs. When we say a model like Llama-3 has 70 billion parameters, we're talking about 70 billion individual numbers that work together to process and generate text.
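To make the idea of "counting parameters" concrete, here is a minimal sketch for a tiny fully connected network. The layer widths are made-up illustrative numbers, not taken from any real model, and the sketch assumes each neuron also has a bias term, which counts as a parameter alongside the connection weights.

```python
# Hypothetical layer widths for a tiny fully connected network
# (illustrative numbers only, not from Llama or DeepSeek).
layer_sizes = [4, 8, 8, 2]


def count_parameters(sizes):
    """Count weights and biases in a fully connected network."""
    total = 0
    for n_in, n_out in zip(sizes, sizes[1:]):
        weights = n_in * n_out  # one weight per connection between the two layers
        biases = n_out          # assumption: one bias per neuron in the receiving layer
        total += weights + biases
    return total


print(count_parameters(layer_sizes))  # prints 130
```

Even this toy network has 130 parameters; a 70-billion-parameter model follows the same bookkeeping at a vastly larger scale.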

These parameters aren't random numbers - they're carefully tuned through training to recognize patterns in language. Think of them as tiny knobs that the training process adjusts as the model learns, each one contributing to its handling of language, context, and meaning.

The billions of parameters in language models are stored in specialized files called tensor files (typically with the .safetensors extension). These files organize the parameters in multi-dimensional arrays, similar to how spreadsheets organize data in rows and columns, but with the ability to extend into more than two dimensions. While the role of each array is not relevant for this short experiment, it is important to note that each set of arrays within the tensor files has a distinct function in the architecture of the LLM.
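For readers curious about what such a file looks like on disk, the sketch below writes and then reads a toy file in the safetensors layout using only the Python standard library: an 8-byte little-endian header length, a JSON header describing each tensor, then the raw bytes. The tensor names (`layer0.weight`, `layer0.bias`) and helper functions are hypothetical, and only float32 data is handled; real model files are normally read with the `safetensors` library rather than by hand.

```python
import json
import os
import struct
import tempfile


def write_tiny_safetensors(path, tensors):
    """Toy writer: `tensors` maps name -> (shape, flat list of float32 values)."""
    header, payload = {}, b""
    for name, (shape, values) in tensors.items():
        raw = struct.pack(f"<{len(values)}f", *values)
        header[name] = {
            "dtype": "F32",
            "shape": list(shape),
            # Offsets are relative to the start of the data section.
            "data_offsets": [len(payload), len(payload) + len(raw)],
        }
        payload += raw
    header_bytes = json.dumps(header).encode("utf-8")
    with open(path, "wb") as f:
        f.write(struct.pack("<Q", len(header_bytes)))  # 8-byte header length
        f.write(header_bytes)
        f.write(payload)


def read_header(path):
    """Return the JSON header: tensor names, dtypes, shapes, byte offsets."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        return json.loads(f.read(header_len))


def read_tensor(path, name):
    """Return (shape, flat list of floats) for one F32 tensor."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        entry = json.loads(f.read(header_len))[name]
        begin, end = entry["data_offsets"]
        f.seek(8 + header_len + begin)
        raw = f.read(end - begin)
    return entry["shape"], list(struct.unpack(f"<{(end - begin) // 4}f", raw))


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "tiny.safetensors")
    write_tiny_safetensors(path, {
        "layer0.weight": ((2, 3), [0.5, -0.25, 1.0, 0.0, 2.0, -1.5]),
        "layer0.bias": ((2,), [0.125, -0.5]),
    })
    for name, info in read_header(path).items():
        print(name, info["dtype"], info["shape"])
    print(read_tensor(path, "layer0.bias"))
```

The header alone is enough to list every array in a file together with its shape, which is a convenient way to pick which matrices to visualize before loading any weights.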

These are the files that we will try to visualize. Typical visualization techniques for neural networks are: line plots, histograms, network graphs, 3D surface plots and heatmaps. Out of these options, for the current exercise, we've chosen heatmaps.

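A heatmap maps naturally onto these arrays, since most of them are two-dimensional weight matrices:

- Each row corresponds to one index along the matrix's first dimension

- Each column corresponds to one index along its second dimension

- The color of each cell encodes the value of the weight (a single parameter) at that position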

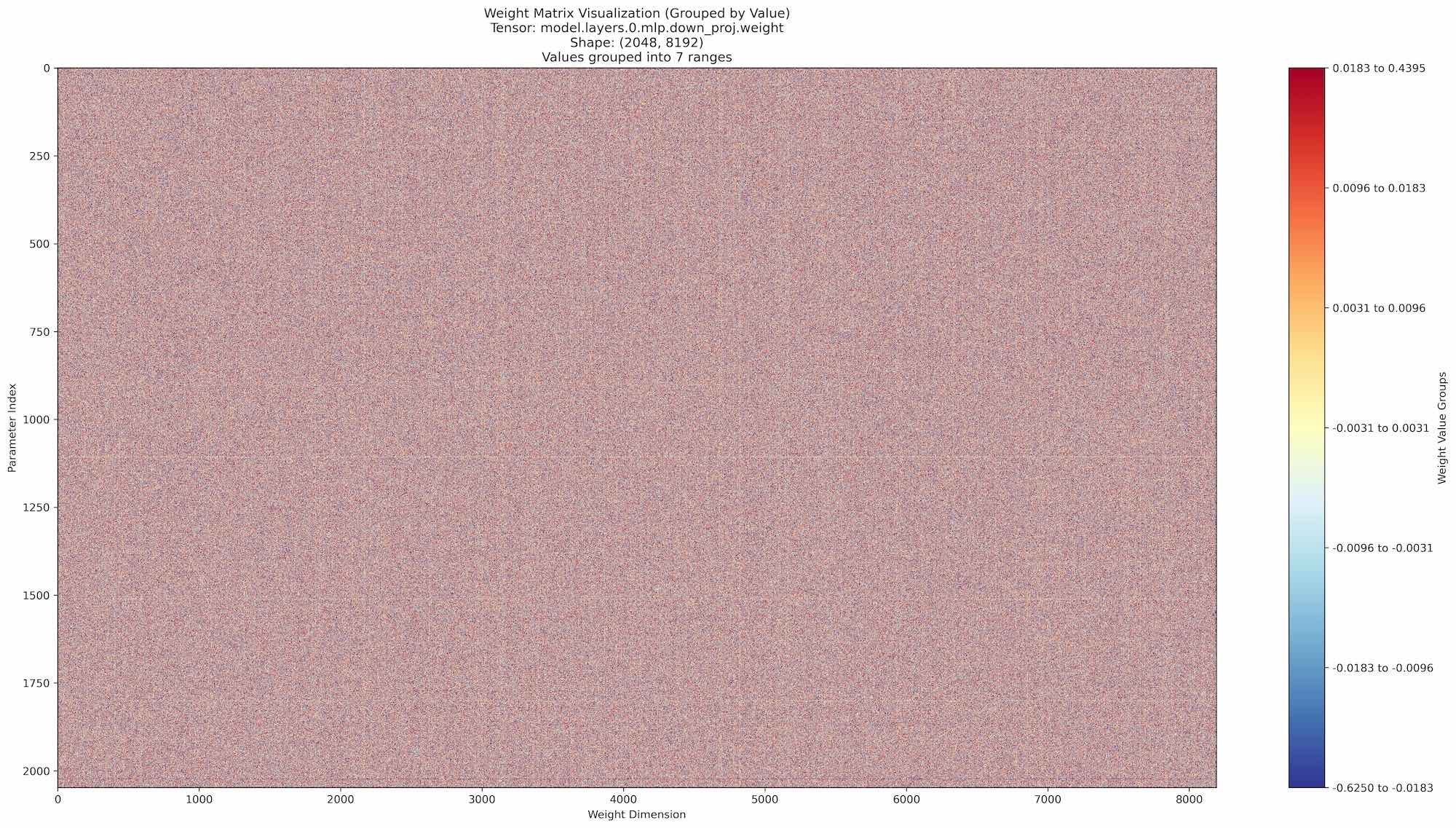
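As a rough illustration of how a weight matrix becomes a heatmap, the sketch below maps each weight to a color and writes the result as a PPM image. It is not the code used to produce the figures in this post: the blue-white-red color scheme, the helper names, and the random stand-in matrix are all assumptions, and a plotting library would normally do this work. Only the standard library is used so the example runs anywhere.

```python
import os
import random
import tempfile


def weight_to_rgb(w, limit):
    """Map a weight to blue-white-red; `limit` is the magnitude shown at full color."""
    t = max(-1.0, min(1.0, w / limit))  # clamp to [-1, 1]
    fade = int(round(255 * (1 - abs(t))))
    if t >= 0:
        return (255, fade, fade)  # zero is white, large positive is red
    return (fade, fade, 255)      # large negative is blue


def heatmap_pixels(matrix):
    """Turn a 2D list of weights into a 2D list of RGB tuples."""
    limit = max(abs(w) for row in matrix for w in row) or 1.0
    return [[weight_to_rgb(w, limit) for w in row] for row in matrix]


def save_ppm(pixels, path):
    """Write RGB pixels as a binary PPM (P6) image, one pixel per weight."""
    height, width = len(pixels), len(pixels[0])
    with open(path, "wb") as f:
        f.write(f"P6 {width} {height} 255\n".encode("ascii"))
        for row in pixels:
            for rgb in row:
                f.write(bytes(rgb))


# Stand-in for a real weight matrix: small Gaussian noise (an assumption).
random.seed(0)
weights = [[random.gauss(0.0, 0.02) for _ in range(64)] for _ in range(48)]

out_path = os.path.join(tempfile.gettempdir(), "weights_heatmap.ppm")
save_ppm(heatmap_pixels(weights), out_path)
print("wrote", out_path)
```

A matrix of pure noise like this one produces the kind of unstructured texture described for the smaller models; any rows, columns, or blocks with unusual magnitudes would stand out as lines or rectangles.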

Let's start with our first model for the analysis: Llama 3.2 with 3B parameters. At first glance, we mostly see a "noise" texture with no distinctive structure emerging.

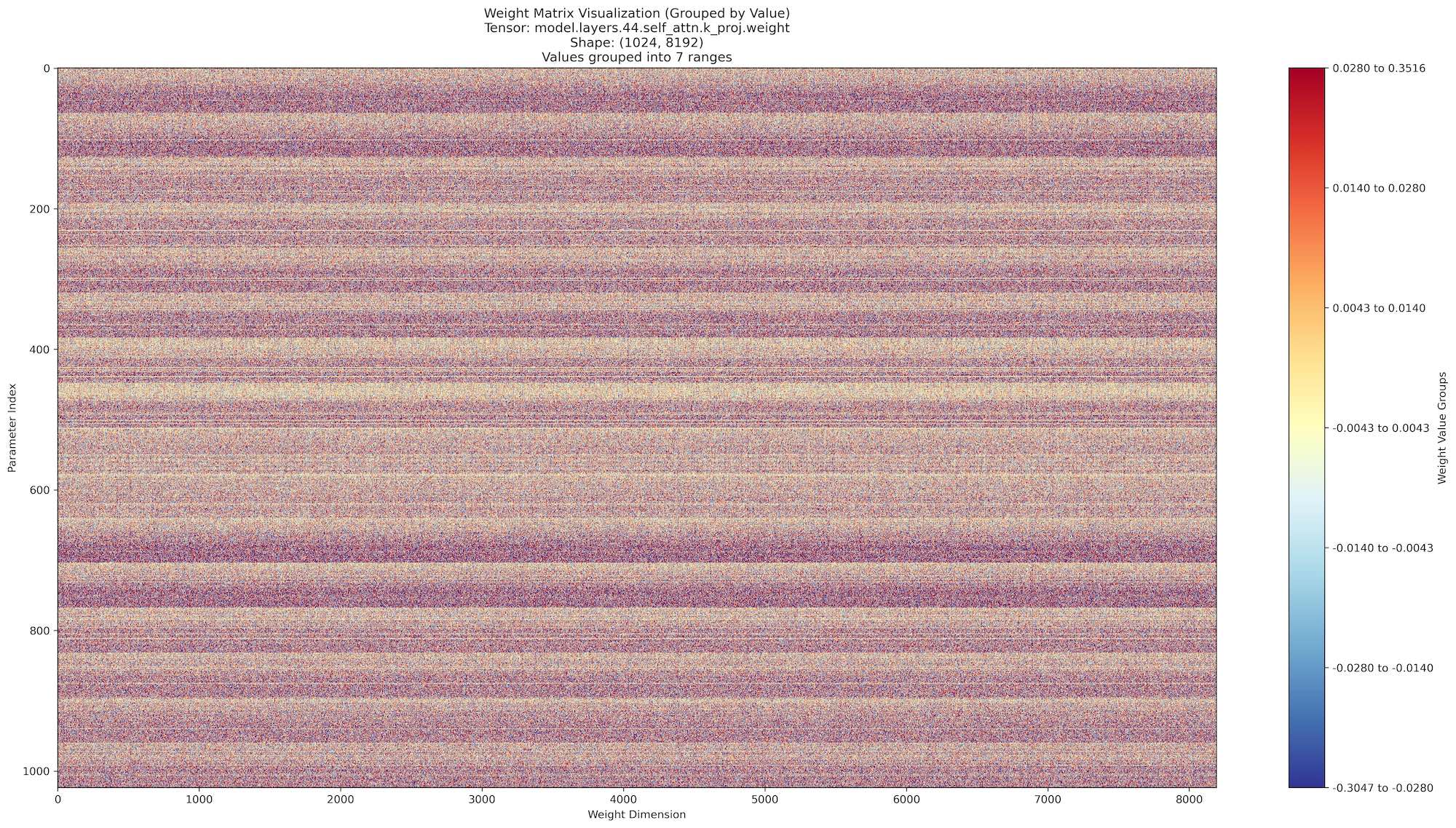

Let's examine a much larger model, Llama 3.3 with 70B parameters. While the noise texture persists, we can observe some subtle structure emerging in the form of vertical and horizontal lines. These lines are visually intriguing, but they're not yet substantial enough for meaningful analysis.
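One way to check whether such lines are more than an impression is to measure them. The sketch below is not part of the original analysis: it is a hypothetical heuristic that flags rows and columns whose average weight magnitude is far above the median, demonstrated on synthetic noise with one row and one column deliberately amplified. The `factor=3.0` threshold is an arbitrary assumption.

```python
import random
from statistics import median


def mean_abs(values):
    """Average magnitude of a sequence of weights."""
    return sum(abs(v) for v in values) / len(values)


def find_stripes(matrix, factor=3.0):
    """Return (rows, cols) whose mean |weight| exceeds `factor` times the median."""
    row_scores = [mean_abs(row) for row in matrix]
    col_scores = [mean_abs(col) for col in zip(*matrix)]  # zip(*m) iterates columns
    row_cut = factor * median(row_scores)
    col_cut = factor * median(col_scores)
    rows = [i for i, s in enumerate(row_scores) if s > row_cut]
    cols = [j for j, s in enumerate(col_scores) if s > col_cut]
    return rows, cols


# Synthetic stand-in for a weight matrix: Gaussian noise (an assumption),
# with row 7 and column 21 amplified to imitate a horizontal and vertical line.
random.seed(1)
noise = [[random.gauss(0.0, 0.02) for _ in range(60)] for _ in range(40)]
for j in range(60):
    noise[7][j] *= 10
for i in range(40):
    noise[i][21] *= 10

rows, cols = find_stripes(noise)
print("rows:", rows, "cols:", cols)
```

A horizontal line in the heatmap corresponds to a flagged row and a vertical line to a flagged column, so a count of flagged indices gives a simple number to compare across models.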

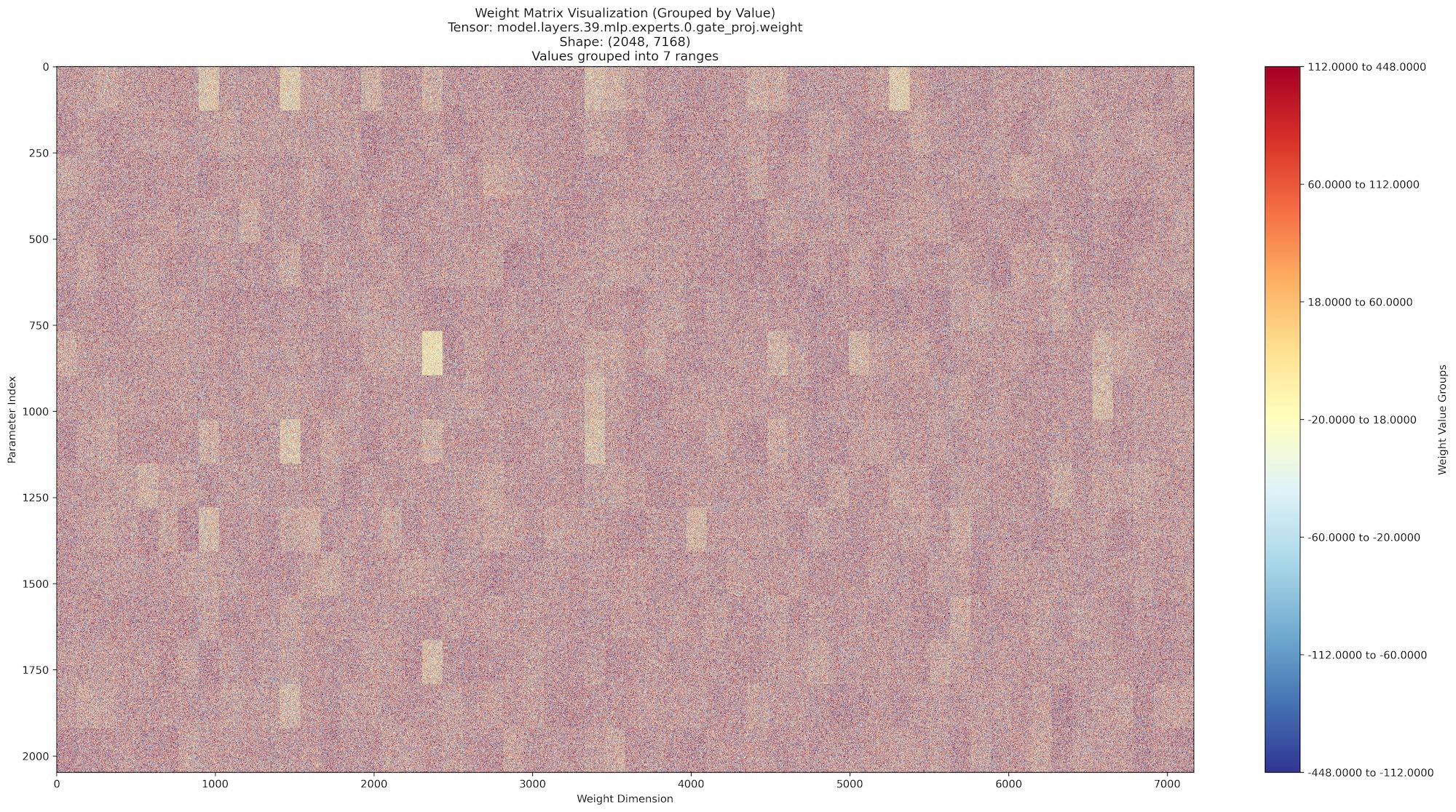

The last analysis focuses on the largest open-source model available at the time of writing this blog post: DeepSeek-R1, which builds on the DeepSeek-V3 base model. At this stage, the visualizations become notably more intriguing. We observe distinct rectangular patterns emerging within the tensor file visualizations, suggesting a more structured organization of the model's parameters.

These rectangular formations raise an interesting question: Could they indicate some form of "measurable" visual intelligence within these models?

While it's tempting to draw conclusions, we should remain cautious in our interpretation. Without access to models with even higher parameter counts for comparison, it's premature to make definitive claims about what these patterns signify. Nevertheless, these observations provide an exciting conclusion to our experimental exploration.

If you're interested in seeing these visualizations in action, our CEO has created a short video walkthrough of this analysis, which you can find here.

Ready to Transform Your Business with Custom AI Solutions?

At Softescu, we specialize in developing intelligent AI applications that understand your unique business needs. Our team of AI engineers and machine learning experts can help you harness the power of Large Language Models and conversational AI while ensuring seamless integration with your existing systems. Whether you're looking to automate processes, enhance customer experiences, or gain deeper business insights, reach out to us for a personalized AI solution consultation.